Blog

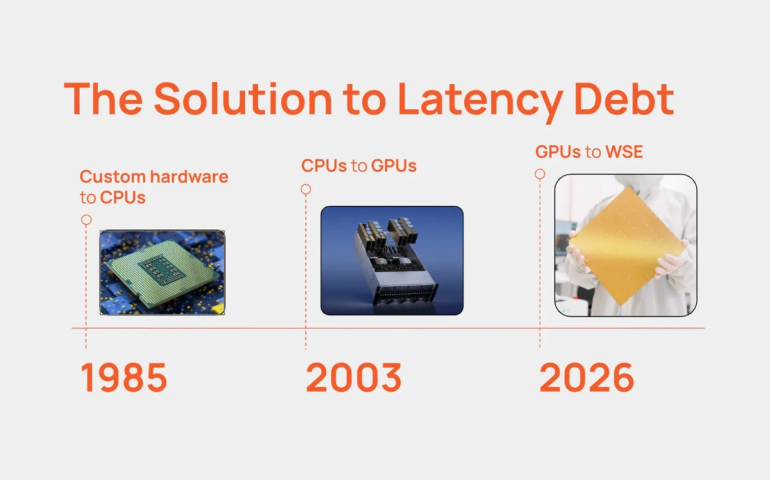

The year of ‘Latency Debt’

January 28, 2026

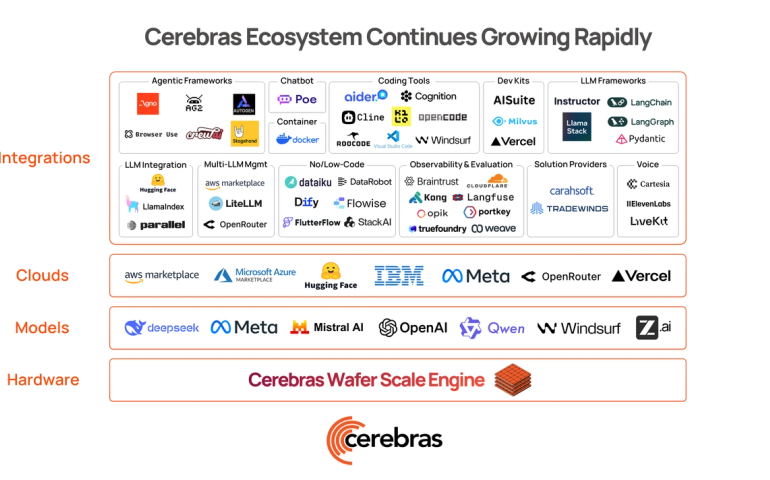

Fast inference is going mainstream — the Cerebras ecosystem is scaling access

January 28, 2026

StackAI × Cerebras: enabling the fastest inference for enterprise AI agents

January 28, 2026

This new model is smarter than Sonnet 4.5…and 20X faster?

January 08, 2026

GLM-4.7: Frontier intelligence at record speed — now available on Cerebras

January 08, 2026

2026: Fast Inference Finds its Groove

January 06, 2026

Thinking Inside the Box: The Implicit Chain Transformer for Efficient State Tracking

December 12, 2025

Case Study - Cognition x Cerebras

December 10, 2025

Jais 2: A Blueprint for Sovereign AI

December 09, 2025

Cerebras at NeurIPS 2025: Nine Papers From Pretraining to Inference

December 04, 2025

Rox × Cerebras: Real-time speed for agentic sales workflows

November 25, 2025

Scaling SWE Agent Data Collection with Dockerized Environments for Execution

November 24, 2025

Scaling Code-Repair Agents with Reinforcement Learning: Extending OpenHands for Real-World Repositories

November 24, 2025

The world’s fastest GLM-4.6 – now available on Cerebras

November 18, 2025

OpenAI GPT-OSS 120B Benchmarked – NVIDIA Blackwell vs. Cerebras

November 06, 2025

Cerebras October 2025 Highlights

November 03, 2025

Building Instant RL Loops with Meta Llama Tools and Cerebras

October 27, 2025

REAP: One-Shot Pruning for Trillion-Parameter Mixture-of-Experts Models

October 16, 2025

MoE Math Demystified: What Does 8x7B Actually Mean?

October 14, 2025

Cerebras Inference: Now Available via Pay Per Token

October 13, 2025

The Fastest AI Datacenters will run on Cerebras: Meet OKC

September 22, 2025

Cerebras API Certification Partner Program for LLM API Providers

September 22, 2025

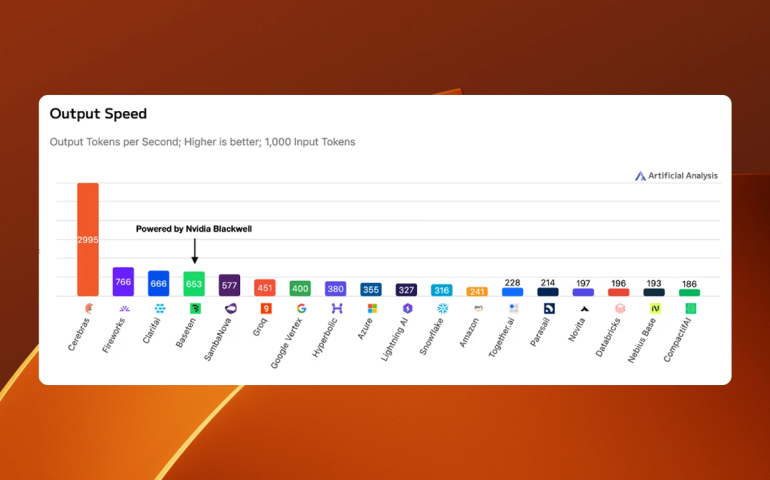

Cerebras CS-3 vs. Nvidia DGX B200 Blackwell

September 19, 2025

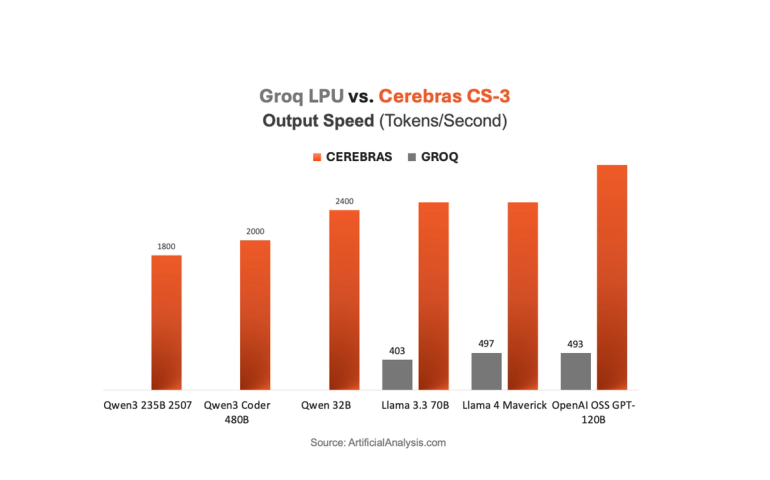

Cerebras CS-3 vs. Groq LPU

September 19, 2025

Cerebras and Docker Compose: Building Isolated AI Code Environments

September 17, 2025

MoE at Scale: Making Sparse Models Fast on Real Hardware

September 03, 2025

Debugging Dead MoE Models: A Step-by-Step Guide

August 19, 2025

OpenAI GPT OSS 120B Runs Fastest on Cerebras

August 06, 2025

Cerebras Launches OpenAI’s gpt-oss-120B at a Blistering 3,000 tokens/sec

August 05, 2025

Router Wars: Which MoE Routing Strategy Actually Works

August 04, 2025

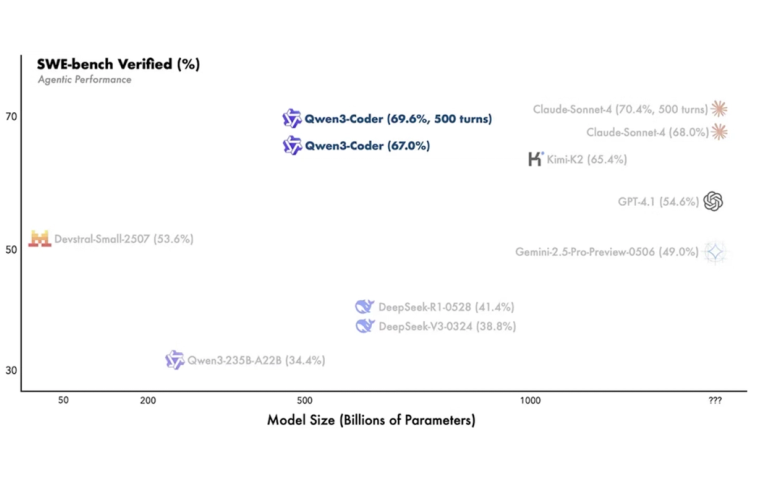

Qwen3 Coder 480B is Live on Cerebras

August 01, 2025

Introducing Cerebras Code

August 01, 2025

From Zero to Sudoku Hero: An RL Adventure

August 01, 2025

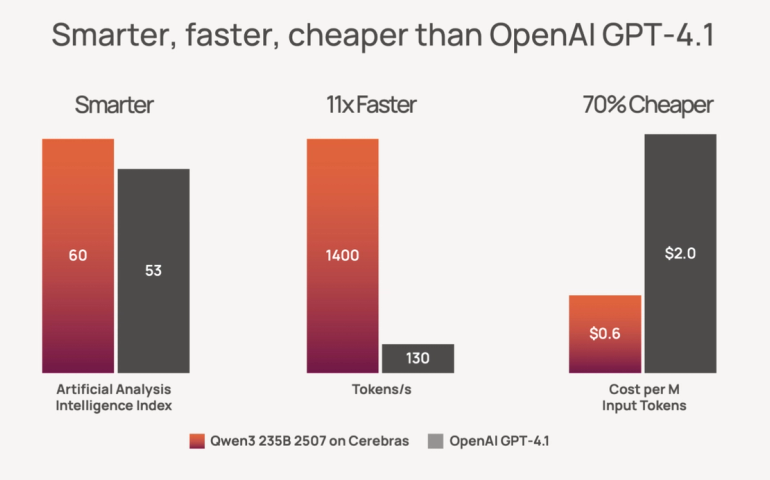

Qwen3 235B 2507 Instruct Now Available on Cerebras

July 29, 2025

MoE Fundamentals: Sparse Models Are the Future

July 22, 2025

Cerebras June Highlights

July 02, 2025

NinjaTech AI: Powering the One-Size-Fits-All AI Agent

July 02, 2025

The Cerebras Scaling Law: Faster Inference Is Smarter AI

June 11, 2025

Cerebras May 2025 Newsletter

June 02, 2025

Bringing Cerebras Inference to Quora Poe’s Fast Growing AI Ecosystem

May 20, 2025

Realtime Reasoning is Here - Qwen3-32B is Live on Cerebras

May 15, 2025

Humanizing Digital Technology with Norby

May 08, 2025

Cerebras Partners with IBM to Accelerate Enterprise AI Adoption

May 06, 2025

Cerebras April Highlights

May 02, 2025

Sei AI - Revolutionizing Financial Services Support with Cerebras-Powered Agents

April 25, 2025

Deep Research with Cerebras and NinjaTech

April 09, 2025

Breaking the Boundaries of Presence: Delphi's Digital Mind Technology

March 26, 2025

Compressing KV cache memory by half with sparse attention

March 24, 2025