Dec 04 2024

AIBI: Revolutionizing Interviews with AI (AI interviews aren't really a thing yet) - Cerebras

AIBI (AI Bot Interviewer) is the first end-to-end AI interview bot that delivers a seamless, real-time interview experience. AIBI can conduct a realistic interview, generating high-quality technical questions and responses in real time during the live audio interview with no noticeable delay. Switching to Cerebras inference let AIBI drastically improve both its “time to first token” and its token generation speed, reducing latency by 66-80%.

As an end-to-end recruiting solution, AIBI handles:

- Generating an initial Google Form job application

- Creating candidate-specific interview questions with an agentic flow

- Conducting a real-time audio interview

- Generating a final report and evaluating the candidate

The Challenge: The Need for Speed, Personalization, and Responsiveness

Hiring the right talent is the most critical and challenging task for any team. It’s no wonder that OpenAI CEO Sam Altman famously advises leaders to spend “between a third and a half of their time hiring.” However, hiring is no cookie-cutter task, and traditional interview processes often lack scalability, consistency, and personalization. While AI-powered interview bots exist, they struggle where it matters most: real-time personalization and responsiveness. Slow inference not only disrupts the flow of the interview but also creates a frustrating experience for candidates, leading to disengagement and potential drop-off. Slow inference also forces AI interviewers to rely on static, pre-generated question sets, sacrificing the customization that could evaluate candidates on a deeper level.

Meet AIBI, an AI Bot recruiter that handles the entire end-to-end recruiting process. Originally powered by GPT-4o, AIBI switched to running Llama 3.1-70B on Cerebras, cutting latency by 72% and dramatically improving the candidate experience. With GPT-4o, candidates experienced delays of several seconds for each generated response, plus further delays to convert the responses to speech (MP3). Follow-up questions faced even more friction: up to 10 seconds of waiting per interaction.

With Cerebras inference, AIBI had an average latency reduction of 75%.

How AIBI was Built

AIBI’s architecture is split into two parts: (1) an HR Dashboard Platform and (2) an AI Interviewer bot.

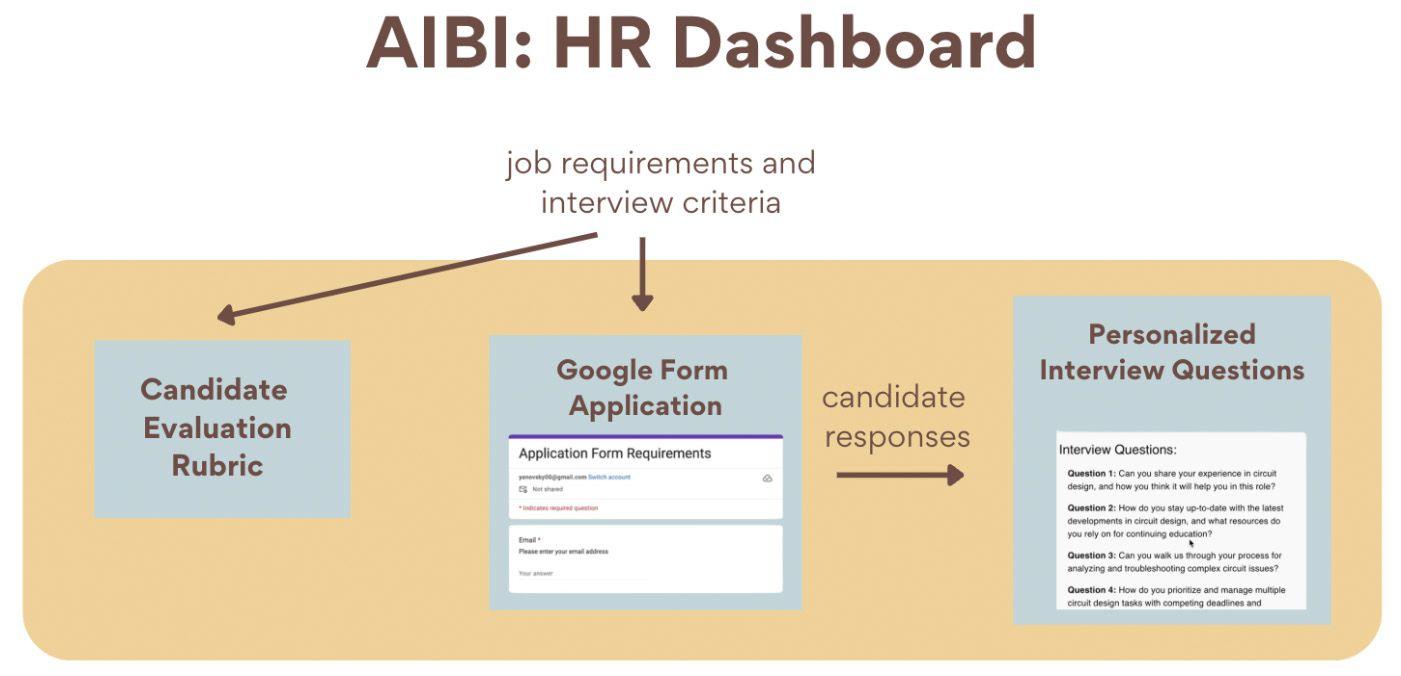

The HR Dashboard Platform

The HR Dashboard Platform generates a customized Google Form job application, preliminary interview questions, and a post-interview grading report.

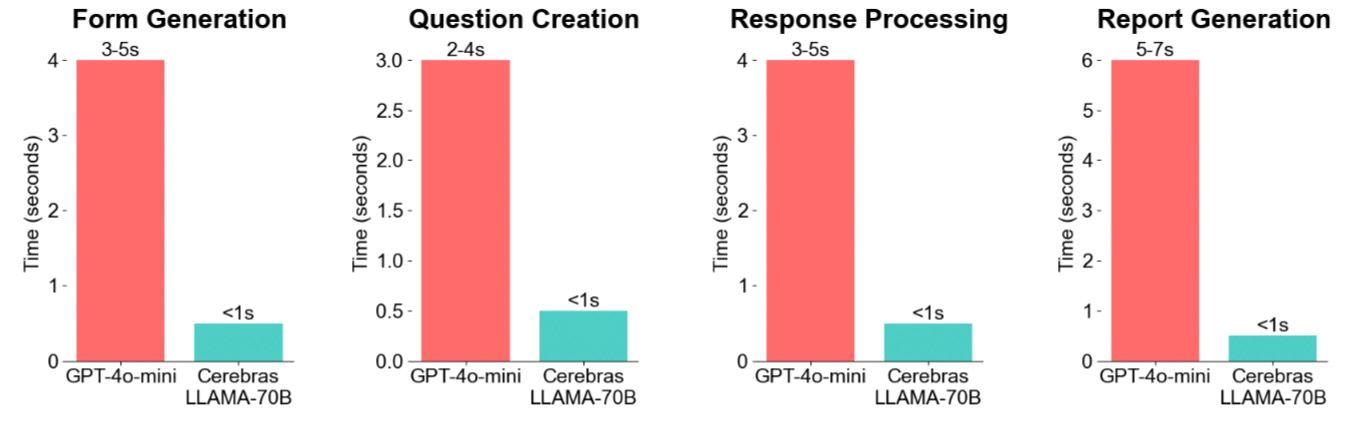

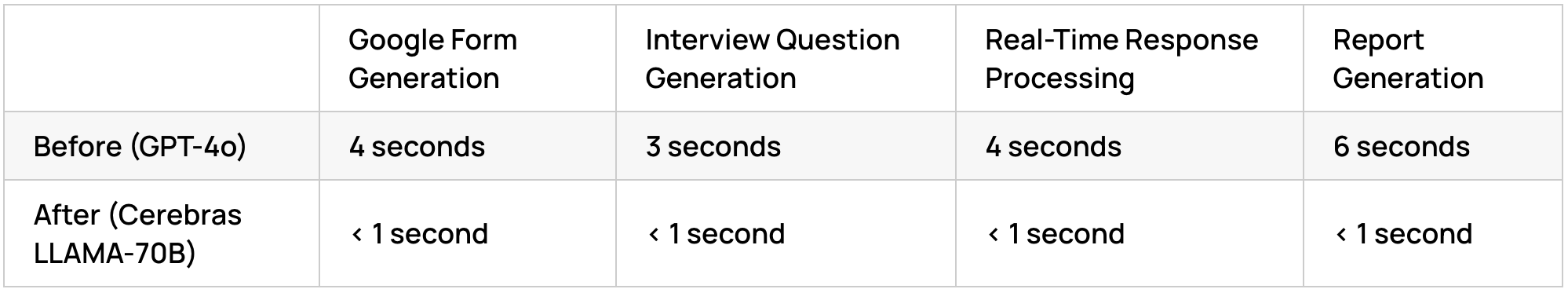

To generate the customized job application form, a job description is passed to the LLM, which outputs a JSON document of 15-30 questions that can be parsed directly into a Google Form. Switching from GPT-4o with structured outputs to Llama 3.1-70B on Cerebras reduced this step from 4 seconds to under 1 second, a 75% latency reduction.
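The post does not publish the JSON format AIBI asks the model for, so the sketch below assumes a hypothetical schema: a top-level `questions` array whose items carry a `title`, a `type` (`short_answer`, `paragraph`, or `multiple_choice`), and optional `options`. It illustrates the kind of validation worth running on the model's output before handing it to whatever code builds the Google Form; that form-building step (for example via the Google Forms API) is not shown.

```python
import json
from dataclasses import dataclass, field
from typing import List

# Hypothetical question types; AIBI's real schema is not described in the post.
ALLOWED_TYPES = {"short_answer", "paragraph", "multiple_choice"}

@dataclass
class FormQuestion:
    title: str
    kind: str
    options: List[str] = field(default_factory=list)

def parse_form_questions(raw: str, min_q: int = 15, max_q: int = 30) -> List[FormQuestion]:
    """Parse and validate the LLM's JSON output before turning it into a form."""
    data = json.loads(raw)  # raises json.JSONDecodeError on malformed output
    items = data["questions"]
    if not (min_q <= len(items) <= max_q):
        raise ValueError(f"expected {min_q}-{max_q} questions, got {len(items)}")
    questions = []
    for item in items:
        kind = item.get("type", "short_answer")
        if kind not in ALLOWED_TYPES:
            raise ValueError(f"unsupported question type: {kind!r}")
        options = list(item.get("options", []))
        if kind == "multiple_choice" and len(options) < 2:
            raise ValueError(f"multiple-choice question needs options: {item['title']!r}")
        questions.append(FormQuestion(title=item["title"], kind=kind, options=options))
    return questions
```

Rejecting malformed output early means a bad generation can simply be retried instead of producing a broken form.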

Once the applicant fills out this Google Form, the responses are passed to the LLM to generate a preliminary set of unique, applicant-specific questions for the AI Interviewer Bot. Switching from GPT-4o to Llama 3.1-70B on Cerebras reduced this step from 3 seconds to under 1 second, a 66% latency reduction.
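A minimal sketch of this step, assuming the model is called through an OpenAI-style chat interface (a list of `role`/`content` messages). `build_question_prompt` and `parse_questions` are hypothetical helpers, and the prompt wording is illustrative rather than AIBI's actual prompt; the model call itself is left out.

```python
import re
from typing import Dict, List

def build_question_prompt(job_description: str, responses: Dict[str, str],
                          num_questions: int = 5) -> List[Dict[str, str]]:
    """Assemble chat messages asking the LLM for applicant-specific interview questions."""
    answers = "\n".join(f"- {name}: {value}" for name, value in responses.items())
    system = (
        "You are a technical interviewer preparing for a live audio interview. "
        f"Write exactly {num_questions} questions tailored to this applicant, one per line."
    )
    user = f"Job description:\n{job_description}\n\nApplication responses:\n{answers}"
    return [{"role": "system", "content": system}, {"role": "user", "content": user}]

# Matches a leading bullet ("-", "*") or number ("1.", "2)") plus following spaces.
_LIST_MARKER = re.compile(r"^\s*(?:[-*]|\d+[.)])\s*")

def parse_questions(text: str) -> List[str]:
    """Split the model's reply into questions, dropping blank lines and list markers."""
    return [_LIST_MARKER.sub("", line).strip() for line in text.splitlines() if line.strip()]
```

Asking for one question per line keeps parsing trivial; a JSON response format would be a sturdier alternative if the model supports it.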

Lastly, the HR dashboard creates an evaluation rubric for the specific role based on the job requirements. Switching from GPT-4o to Llama 3.1-70B on Cerebras led to a 75% reduction in latency for this step.
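The post does not describe the rubric's structure. One plausible shape, sketched here purely as an assumption, is a list of weighted criteria derived from the job requirements, with the LLM grader assigning each criterion a 1-5 score that is then folded into a single percentage for the final report. `Criterion` and `weighted_score` are hypothetical names.

```python
from dataclasses import dataclass
from typing import Dict, List

@dataclass(frozen=True)
class Criterion:
    """One rubric line, e.g. derived from a job requirement (hypothetical structure)."""
    name: str
    weight: float  # relative importance; weights need not sum to 1

def weighted_score(rubric: List[Criterion], scores: Dict[str, int], max_score: int = 5) -> float:
    """Express weighted per-criterion scores (1..max_score) as a percentage of the maximum."""
    total_weight = sum(c.weight for c in rubric)
    if total_weight <= 0:
        raise ValueError("rubric weights must sum to a positive number")
    weighted = 0.0
    for c in rubric:
        score = scores[c.name]  # raises KeyError if the grader skipped a criterion
        if not 1 <= score <= max_score:
            raise ValueError(f"score for {c.name!r} out of range: {score}")
        weighted += c.weight * score
    return 100 * weighted / (total_weight * max_score)
```

For example, with weights 2 (Python) and 1 (Communication) and scores 5 and 2, the result is 100 * (2*5 + 1*2) / (3*5) = 80.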

Overall, the average latency reduction across the HR Dashboard steps is 72%.
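The reduction figures follow from 100 * (1 - after / before). The snippet below reproduces them, treating "<1 second" as exactly 1 second (so each computed value is a lower bound; 3 s to 1 s gives about 66.7%, which the post reports as 66%), and checks that the three per-step figures average to the stated 72%. It also converts a percentage reduction into a speedup factor: a 75% reduction means responses arrive 4x faster.

```python
def latency_reduction(before_s: float, after_s: float) -> float:
    """Percent reduction in latency when a step goes from before_s to after_s seconds."""
    return 100 * (1 - after_s / before_s)

def speedup_from_reduction(reduction_pct: float) -> float:
    """Convert a percent latency reduction into a 'times faster' factor."""
    return 1 / (1 - reduction_pct / 100)

# Timings from the post, with "<1 second" treated as exactly 1 s (a conservative bound).
form_reduction = latency_reduction(4.0, 1.0)      # application form step
question_reduction = latency_reduction(3.0, 1.0)  # applicant-specific questions step

# Per-step reductions as reported in the post (the rubric step only gives a percentage).
reported = {"application form": 75, "applicant questions": 66, "rubric": 75}
average_reduction = sum(reported.values()) / len(reported)

print(f"form: {form_reduction:.1f}%, questions: {question_reduction:.1f}%")
print(f"HR dashboard average: {average_reduction:.0f}%")
print(f"75% reduction = {speedup_from_reduction(75):.0f}x faster")
```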

The AI Interviewer Bot

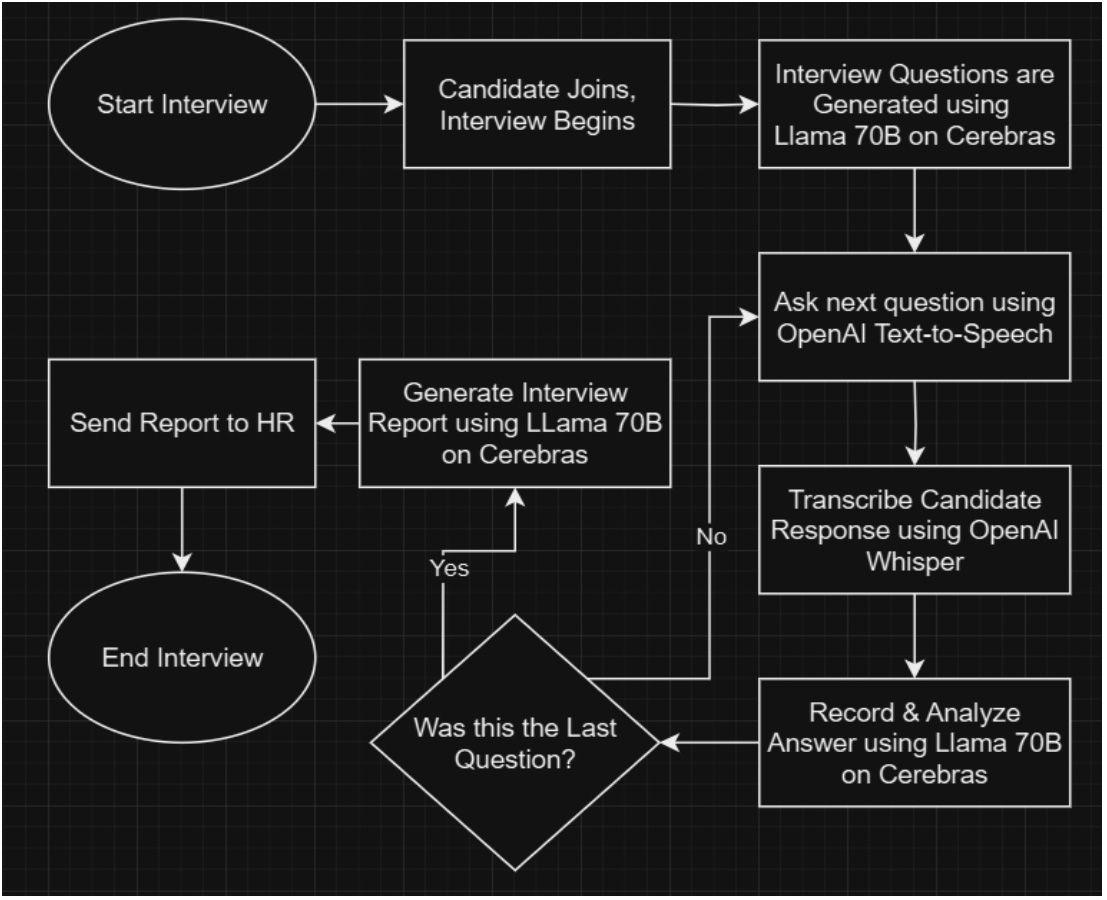

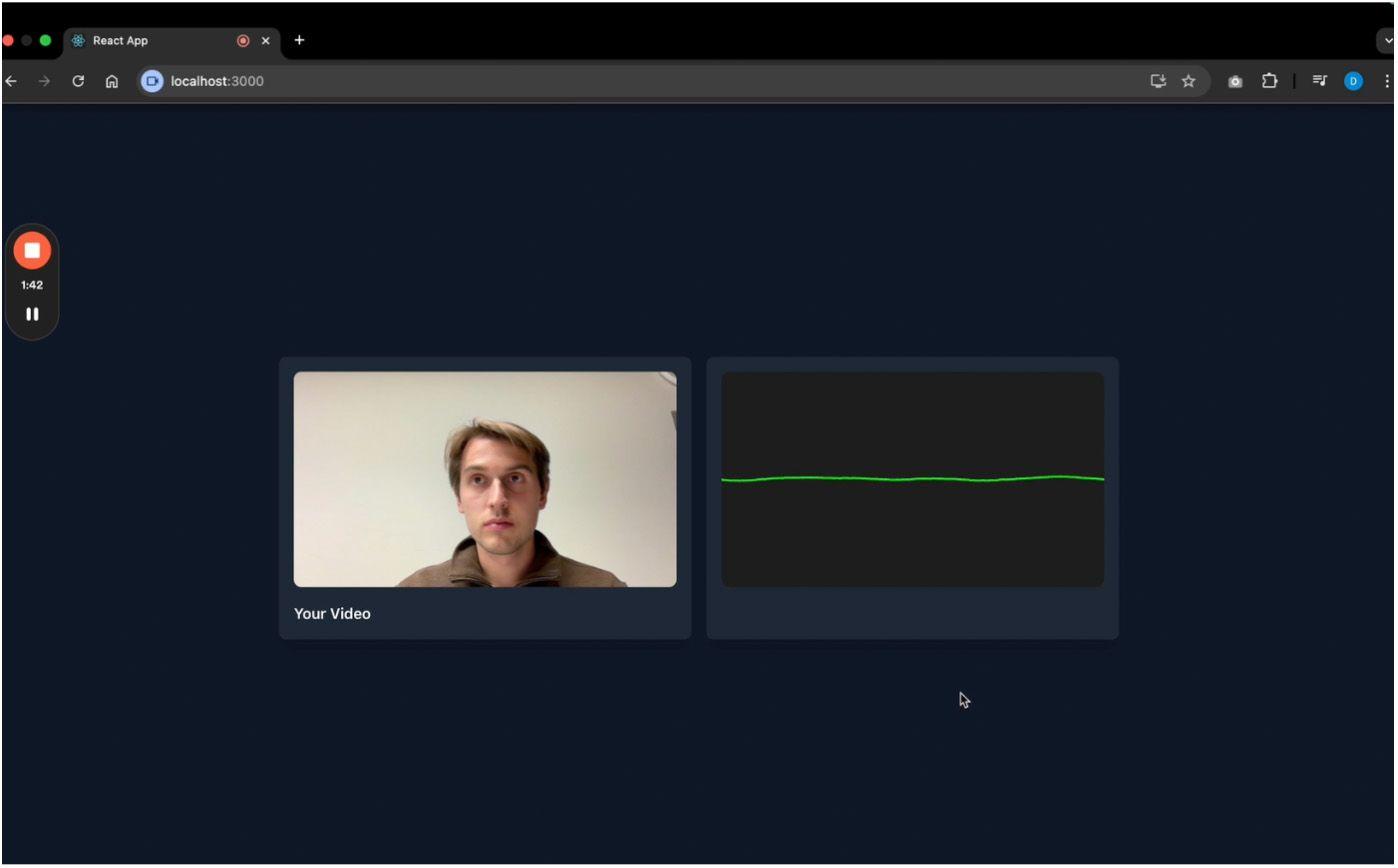

The AI Interviewer Bot conducts the audio interview with the candidate. Once the candidate joins the interview, they are greeted with a pre-recorded message explaining the rules of the interview and requesting consent. After the candidate consents, the bot begins asking the uniquely generated questions.

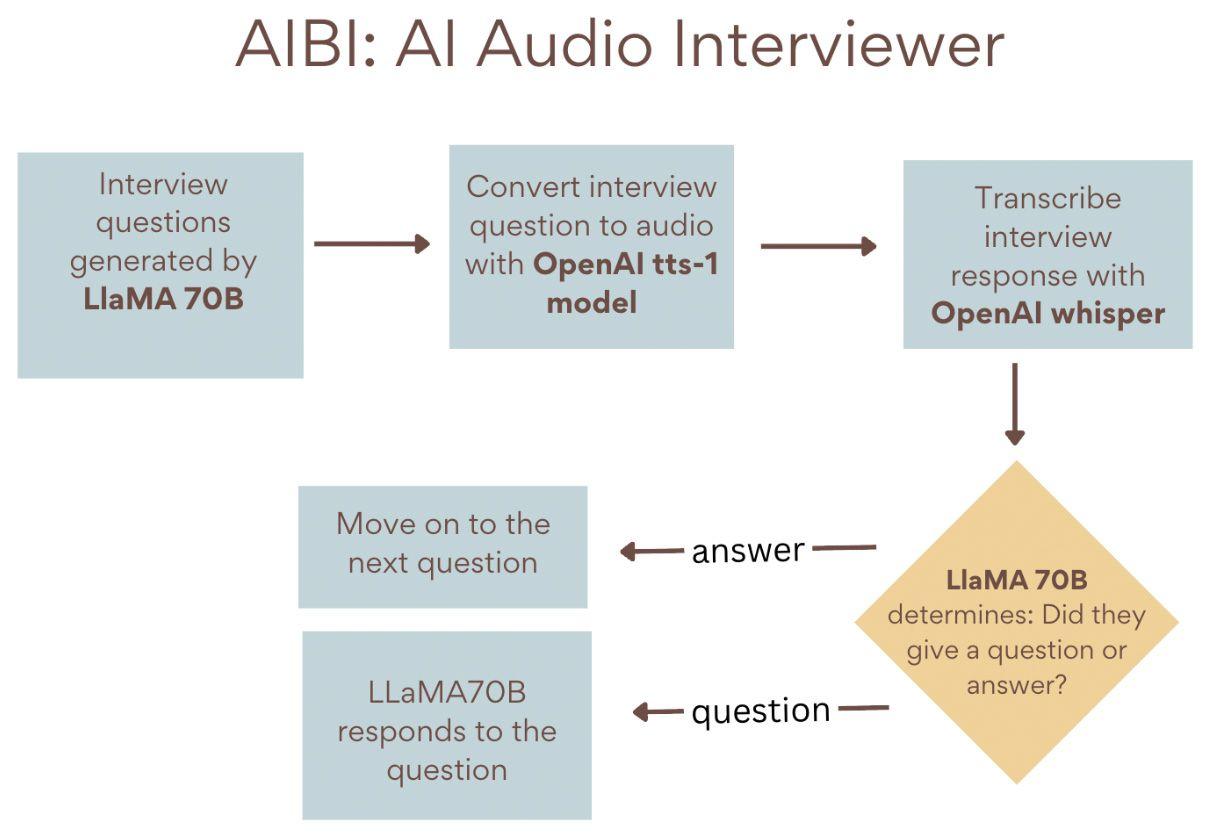

Asking the question

For each interview question generated by Llama 3.1-70B running on Cerebras, the question is converted into an audio file using OpenAI’s text-to-speech (TTS) model ‘tts-1’. The audio file is then sent to the front end and played to the candidate, who has up to 2 minutes to respond.
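A sketch of the text-to-speech step using the OpenAI Python SDK's speech endpoint (`client.audio.speech.create`) with the `tts-1` model named above. The voice (`alloy`) is an assumption, since the post does not say which one AIBI uses, and `question_to_mp3` is a hypothetical helper. The client is passed in rather than created inside the function so a stub can replace it in tests.

```python
from typing import Any

TTS_MODEL = "tts-1"  # model named in the post
TTS_VOICE = "alloy"  # assumption: the post does not say which voice AIBI uses

def question_to_mp3(client: Any, question: str) -> bytes:
    """Convert one interview question to MP3 bytes via OpenAI's speech endpoint.

    `client` is expected to be an `openai.OpenAI()` instance; it is typed as Any
    so that a stub can stand in for it in tests.
    """
    response = client.audio.speech.create(
        model=TTS_MODEL,
        voice=TTS_VOICE,
        input=question,
        response_format="mp3",
    )
    return response.content  # raw audio bytes to send to the front end
```

In a real deployment the client would be `openai.OpenAI()`, which reads `OPENAI_API_KEY` from the environment; the returned bytes are what the front end plays to the candidate.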

Candidate Response

As the candidate responds, the audio is transcribed to text using OpenAI’s speech-to-text (STT) model ‘whisper-1’. The transcribed text is then passed back to the LLM to determine whether it is an answer or a question (e.g., a request for clarification). If it is a question, the transcribed conversation is passed to the LLM as context to generate an answer to the candidate’s question. Otherwise, the AI Interviewer Bot continues with the next question.
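The answer-or-question branch can be sketched as follows. `llm` stands for any function that takes OpenAI-style chat messages and returns the model's text (a hypothetical interface, for example a thin wrapper around a chat-completions call to Llama 3.1-70B). The prompts and the one-word `ANSWER`/`QUESTION` protocol are illustrative assumptions, not AIBI's actual prompts, and transcription with `whisper-1` is assumed to have already produced `candidate_text`.

```python
from typing import Callable, Dict, List, Optional, Tuple

Message = Dict[str, str]
# Hypothetical interface: any function that maps chat messages to the model's reply text.
LLM = Callable[[List[Message]], str]

CLASSIFY_PROMPT = (
    "You are monitoring a job interview. Decide whether the candidate's latest turn is an "
    "ANSWER to the interview question or a QUESTION for the interviewer, such as a request "
    "for clarification. Reply with exactly one word: ANSWER or QUESTION."
)
CLARIFY_PROMPT = (
    "You are a friendly technical interviewer. Briefly answer the candidate's question, "
    "using the conversation so far as context."
)

def handle_candidate_turn(llm: LLM, transcript: List[Message],
                          candidate_text: str) -> Tuple[str, Optional[str]]:
    """Classify one transcribed turn; return ("question", reply) or ("answer", None)."""
    transcript.append({"role": "user", "content": candidate_text})
    # Call 1: a short one-word classification of the latest turn.
    label = llm([{"role": "system", "content": CLASSIFY_PROMPT},
                 {"role": "user", "content": candidate_text}]).strip().upper()
    if label.startswith("QUESTION"):
        # Call 2: answer the candidate, with the whole conversation as context.
        reply = llm([{"role": "system", "content": CLARIFY_PROMPT}, *transcript])
        transcript.append({"role": "assistant", "content": reply})
        return "question", reply
    # Anything else is treated as an answer, so the bot moves on to the next question.
    return "answer", None
```

Asking the model for a single label keeps the classification call short, which matters because it sits on the critical path of every candidate turn.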

Before switching to Cerebras inference, this series of LLM calls made for a frustratingly slow candidate experience. GPT-4o took 1-2 seconds to determine whether the candidate’s response contained a question, followed by another ~3 seconds to generate a reply. With Cerebras, each step took under 1 second.
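To see where the time goes in a chain of calls like this, it helps to measure each call's time to first token separately from its total time. The helper below is a generic sketch: `time_stream` is hypothetical and accepts a zero-argument function that opens a stream of text chunks, so the clock starts before the request is sent. With an OpenAI-compatible streaming client (an assumption about how AIBI calls the model), that function could look like `lambda: (c.choices[0].delta.content or "" for c in client.chat.completions.create(model=..., messages=msgs, stream=True))`.

```python
import time
from dataclasses import dataclass
from typing import Callable, Iterable, List, Optional

@dataclass
class StreamTiming:
    text: str
    ttft_s: float   # seconds until the first non-empty chunk arrived
    total_s: float  # seconds until the stream was exhausted

def time_stream(open_stream: Callable[[], Iterable[str]]) -> StreamTiming:
    """Time a streaming call, starting the clock before the request is opened."""
    start = time.perf_counter()
    ttft: Optional[float] = None
    parts: List[str] = []
    for chunk in open_stream():
        if chunk and ttft is None:
            ttft = time.perf_counter() - start
        parts.append(chunk)
    total = time.perf_counter() - start
    # If no non-empty chunk ever arrived, report the total time as the TTFT.
    return StreamTiming("".join(parts), ttft if ttft is not None else total, total)
```

Timing the classification call and the reply call separately shows whether a slow turn is dominated by waiting for the first token or by generating the rest of the response.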

Overall, the average latency reduction for the AI audio interviewer is 75%.

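Here’s a breakdown of the performance improvements achieved when switching from GPT-4o to Llama 3.1-70B running on Cerebras:

- Job application form generation: 4 seconds to <1 second (75% latency reduction)

- Applicant-specific question generation: 3 seconds to <1 second (66% latency reduction)

- Evaluation rubric generation: 75% latency reduction

- Classifying a candidate response as an answer or a question: 1-2 seconds to <1 second

- Answering a candidate’s question: ~3 seconds to <1 second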

What’s next?

AIBI is just getting started and will continue to grow as an efficient, realistic, and intelligent interviewing tool. Some exciting areas of exploration include:

- Adding AI-generated video to the interview bot to create a face-to-face experience

- Expanding the system’s capacity to handle multi-round interviews and follow-ups

- Implementing more sophisticated agents that adjust the interview based on the candidate’s responses, allowing for dynamic interview paths

If you have any feature suggestions or would like to contribute to this project, check out the code here: https://github.com/yanovsk/ai_interviewer_dashboard_cerebras

Cerebras inference is powering the next generation of AI applications, running 70x faster than on GPUs. The Cerebras x Bain Capital Ventures Fellows Program invites engineers, researchers, and students to build impactful, next-level products unlocked by instant AI. Learn more at cerebras.ai/fellows