James Wang

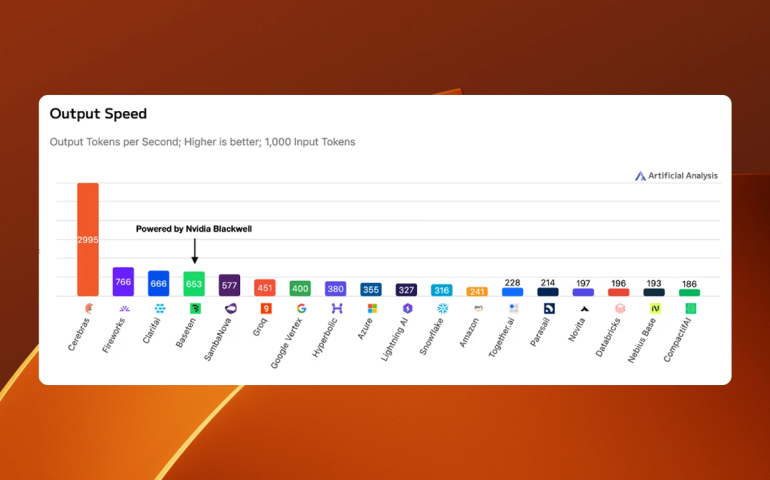

OpenAI GPT-OSS 120B Benchmarked – NVIDIA Blackwell vs. Cerebras

November 06, 2025

Blog

Cerebras Inference: Now Available via Pay Per Token

October 13, 2025

Blog

NinjaTech AI Unveils World’s Fastest Deep Research: Revolutionizing AI-Powered Information Analysis

August 15, 2025

Press Release

OpenAI GPT OSS 120B Runs Fastest on Cerebras

August 06, 2025

Blog

Qwen3 Coder 480B is Live on Cerebras

August 01, 2025

Blog

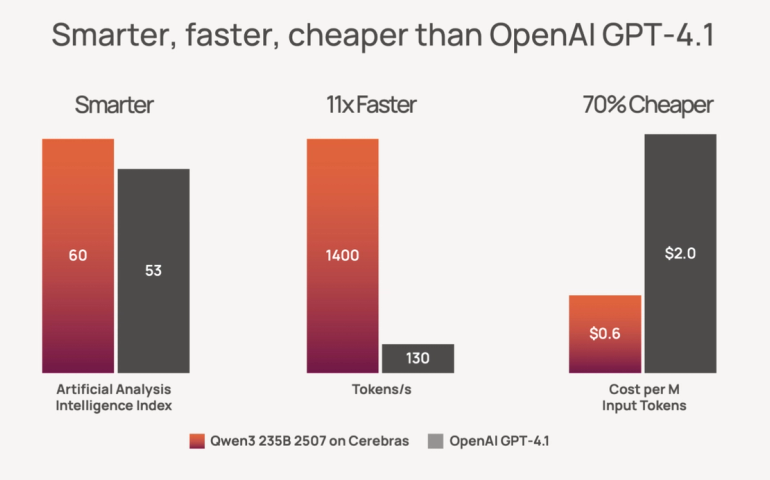

Qwen3 235B 2507 Instruct Now Available on Cerebras

July 29, 2025

Blog

The Cerebras Scaling Law: Faster Inference Is Smarter AI

June 11, 2025

Blog

Realtime Reasoning is Here - Qwen3-32B is Live on Cerebras

May 15, 2025

Blog

Deep Research with Cerebras and NinjaTech

April 09, 2025

Blog

Cerebras brings instant inference to Mistral Le Chat

February 06, 2025

Blog

Cerebras Launches World's Fastest DeepSeek R1 Llama-70B Inference

January 29, 2025

Blog

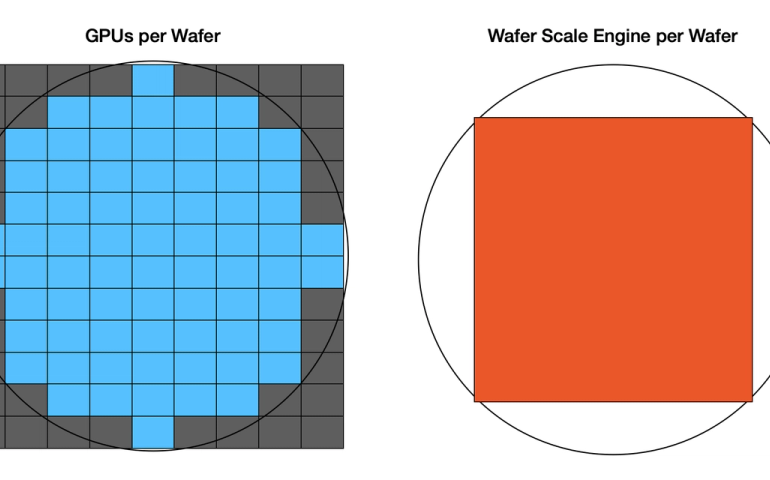

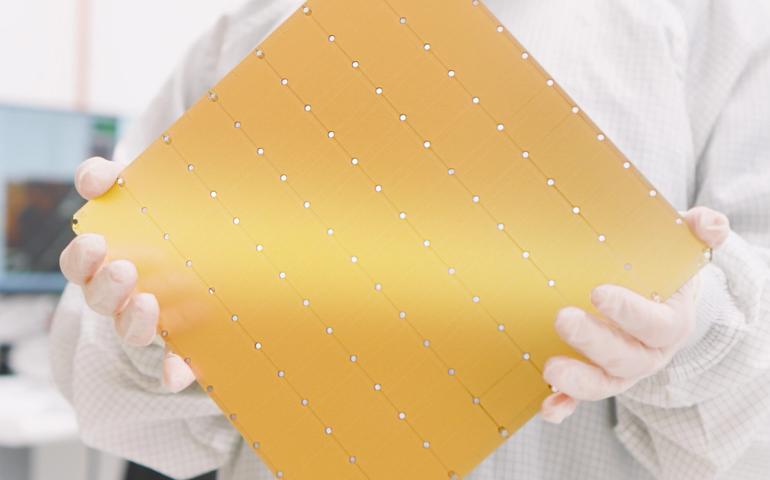

100x Defect Tolerance: How Cerebras Solved the Yield Problem

January 13, 2025

Blog

Five Reasons to Join Cerebras in 2025

January 01, 2025

Blog

Llama 3.1 405B now runs at 969 tokens/s on Cerebras Inference

November 18, 2024

Blog

Cerebras Inference now 3x faster: Llama3.1-70B breaks 2,100 tokens/s

October 24, 2024

Blog

Introducing Cerebras Inference: AI at Instant Speed

August 27, 2024

Blog

Introducing Sparse Llama: 70% Smaller, 3x Faster, Full Accuracy

May 15, 2024

Blog

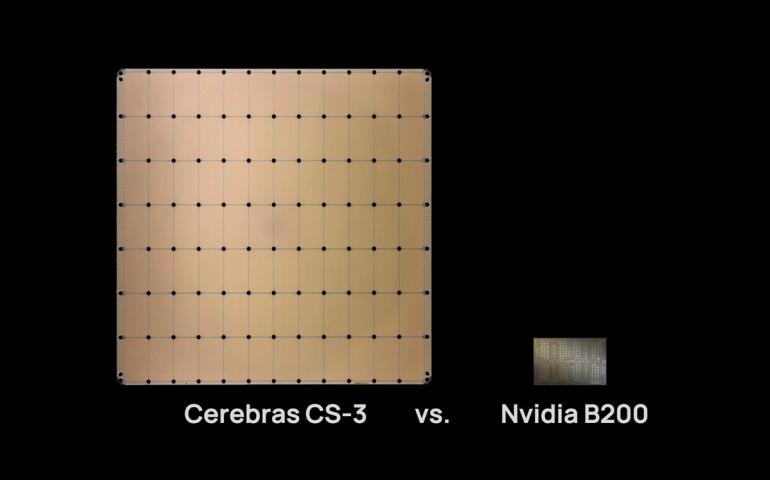

Cerebras CS-3 vs. Nvidia B200: 2024 AI Accelerators Compared

April 12, 2024

Blog

Cerebras CS-3: the world’s fastest and most scalable AI accelerator

March 12, 2024

Blog

Cerebras, Petuum, and MBZUAI Announce New Open-Source CrystalCoder and LLM360 Methodology to Accelerate Development of Transparent and Responsible AI Models

December 14, 2023

Press Release

Cerebras Software Release 2.0: 50% Faster Training, PyTorch 2.0 Support, Diffusion Transformers, and More

November 10, 2023

Blog

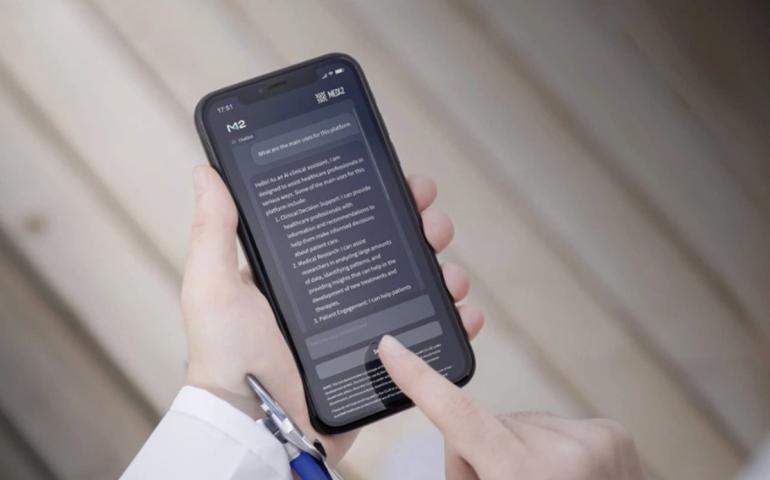

How we fine-tuned Llama2-70B to pass the US Medical License Exam in a week

October 12, 2023

Blog

Introducing Condor Galaxy 1: a 4 exaFLOPS Supercomputer for Generative AI

July 20, 2023

Blog