Get Instant

AI Inference

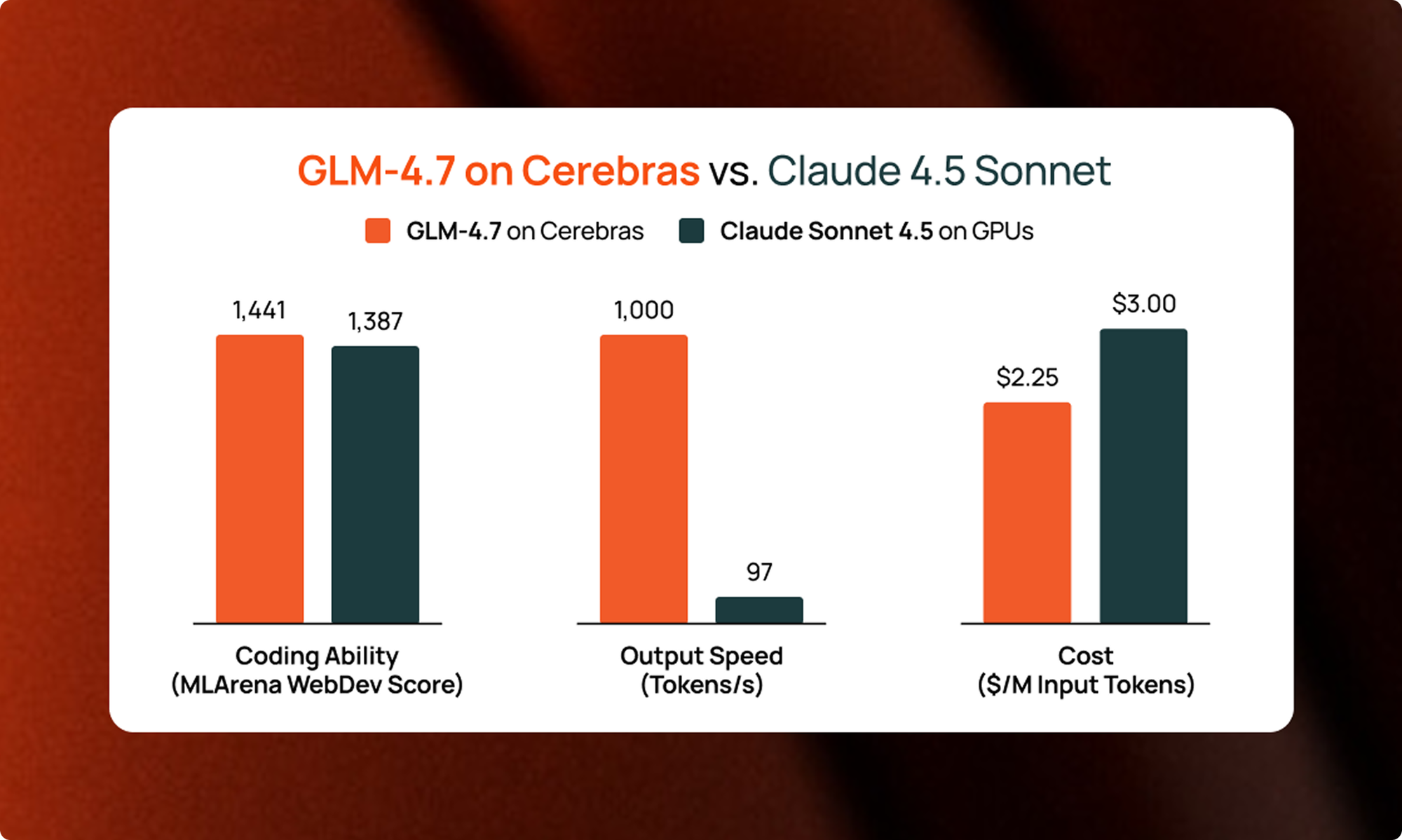

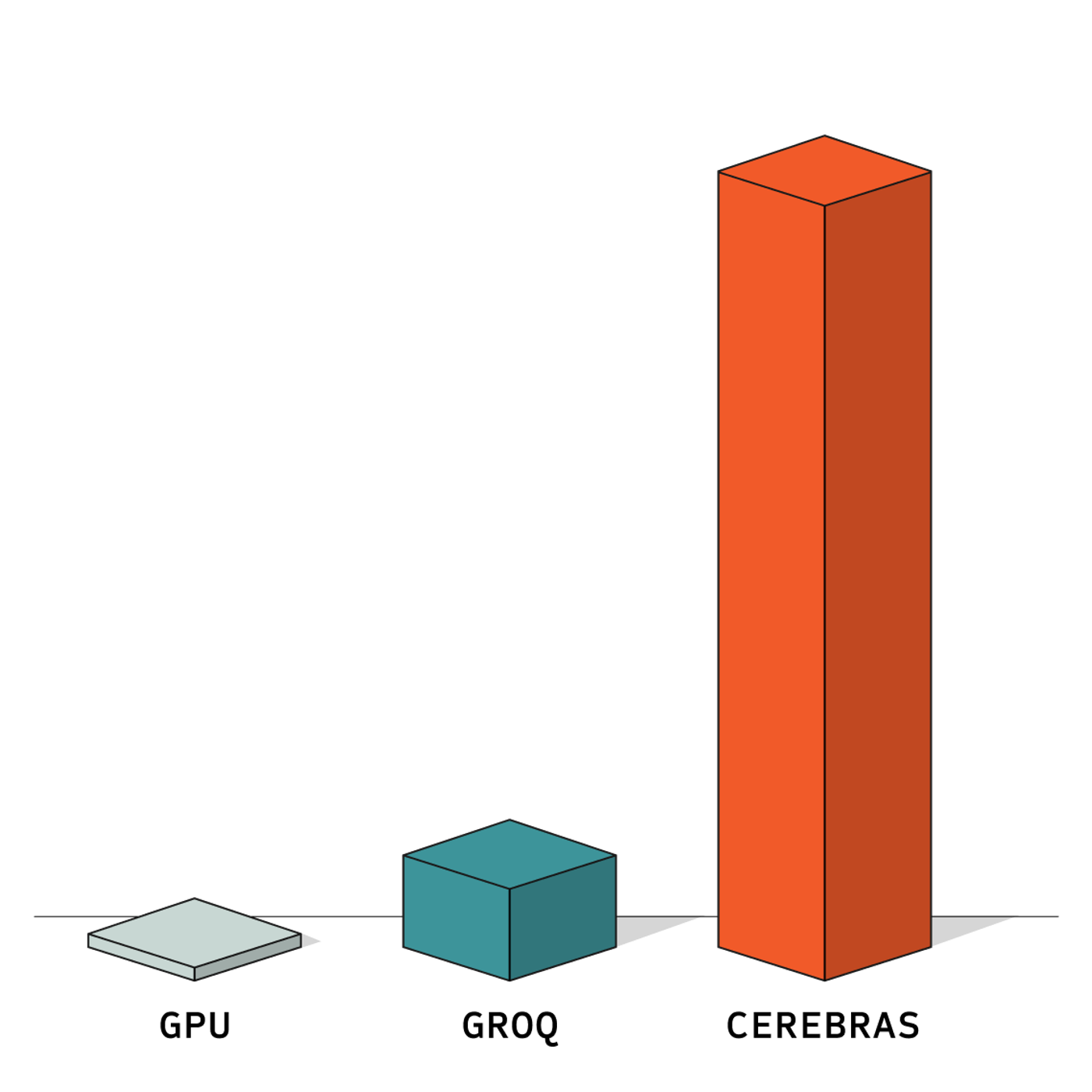

Experience blazing fast AI responses for code generation, summarization, and autonomous tasks with the world’s fastest AI inference.

Features

Straightforward Pricing

Cerebras Inference offers flexible, transparent pricing

designed for everyone—from startups to global enterprises.

Pay Per Hour

Scale usage based on your training needs. Simply share your AI workload, & we’ll determine the time needed to train, fine-tune, and deploy your model.

Pay Per Model

No time to train? Let our AI experts design, train, and fine-tune a state-of-the-art generative AI solution tailored to your dataset.

Want to know more?

No time to train? Let our AI experts design, train, and fine-tune a state-of-the-art generative AI solution tailored to your dataset.

Customer Stories

"With Cerebras’ inference speed, GSK is developing innovative AI applications, such as intelligent research agents, that will fundamentally improve the productivity of our researchers and drug discovery process."

"DeepLearning.AI has multiple agentic workflows that require prompting an LLM repeatedly to get a result. Cerebras has built an impressively fast inference capability which will be very helpful to such workloads."

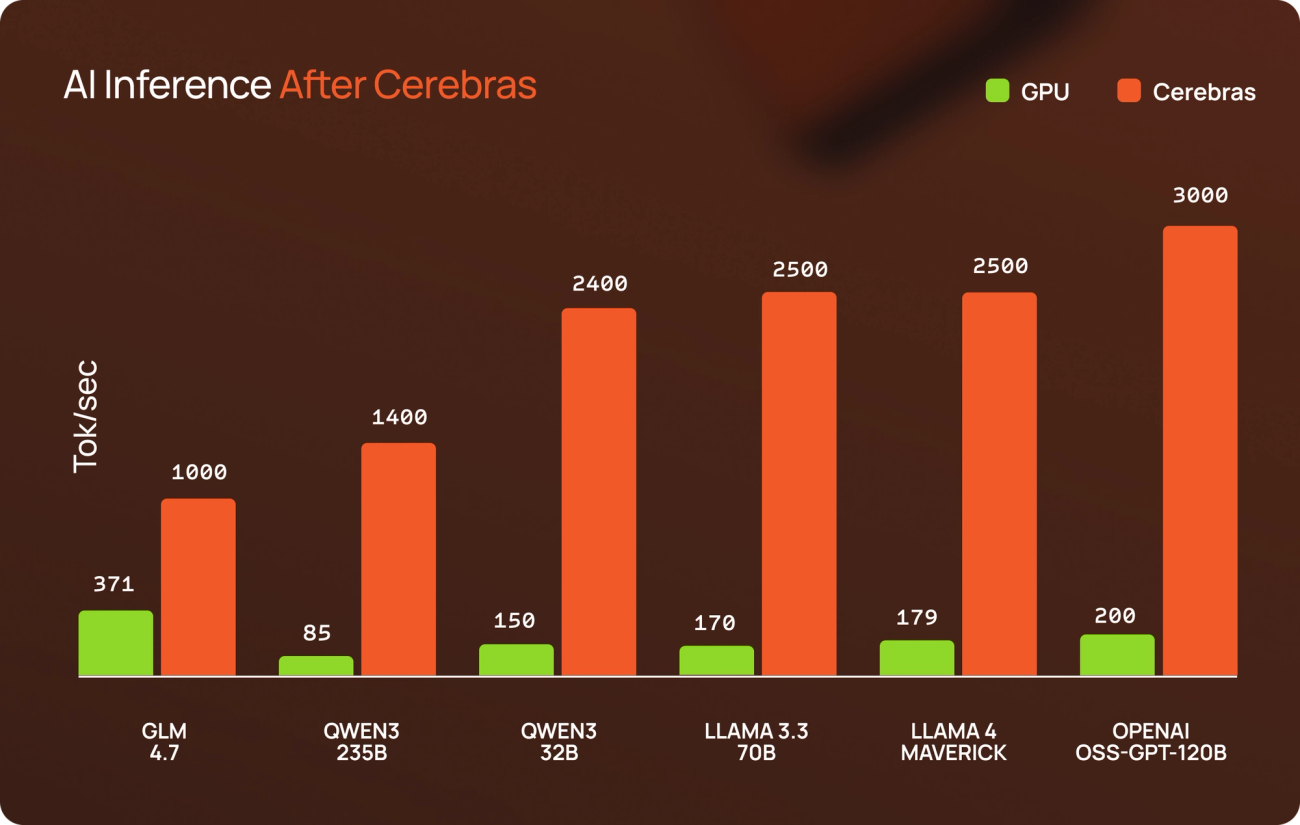

"We’re excited to share the first models in the Llama 4 herd and partner with Cerebras to deliver the world’s fastest AI inference for them, which will enable people to build more personalized multimodal experiences. By delivering over 2,000 tokens per second for Scout – more than 30 times faster than closed models like ChatGPT or Anthropic, Cerebras is helping developers everywhere to move faster, go deeper, and build better than ever before."

"For traditional search engines, we know that lower latencies drive higher user engagement and that instant results have changed the way people interact with search and with the internet. At Perplexity, we believe ultra-fast inference speeds like what Cerebras is demonstrating can have a similar unlock for user interaction with the future of search - intelligent answer engines."

By partnering with Cerebras, we are integrating cutting-edge AI infrastructure […] that allows us to deliver the unprecedented speed, most accurate and relevant insights available – helping our customers make smarter decisions with confidence.