Get Instant AI Inference

Experience real-time AI responses for code generation, summarization, and autonomous tasks with the world’s fastest AI inference.

OpenAI on Cerebras

3,000 tokens/sec

OpenAI’s new open frontier reasoning model GPT-OSS-120B is now live on Cerebras, delivering the best of GenAI without compromises: openness, intelligence, speed, cost, and ease of use.

QWEN 3 FAMILY

Developed by Alibaba, the Qwen 3 family features state-of-the-art open-weight models built for real-world performance, including powerful reasoning, code generation, agentic workflows, and tool use.

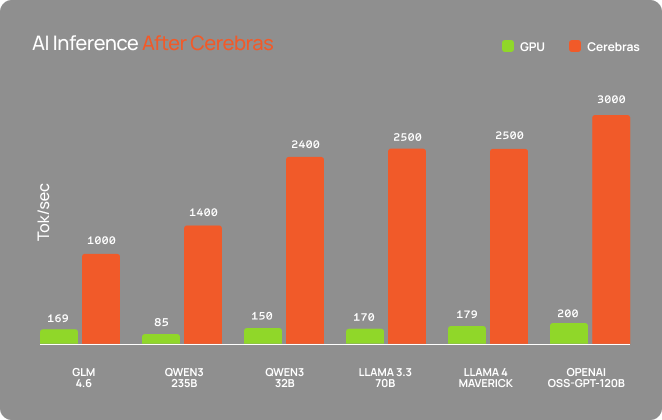

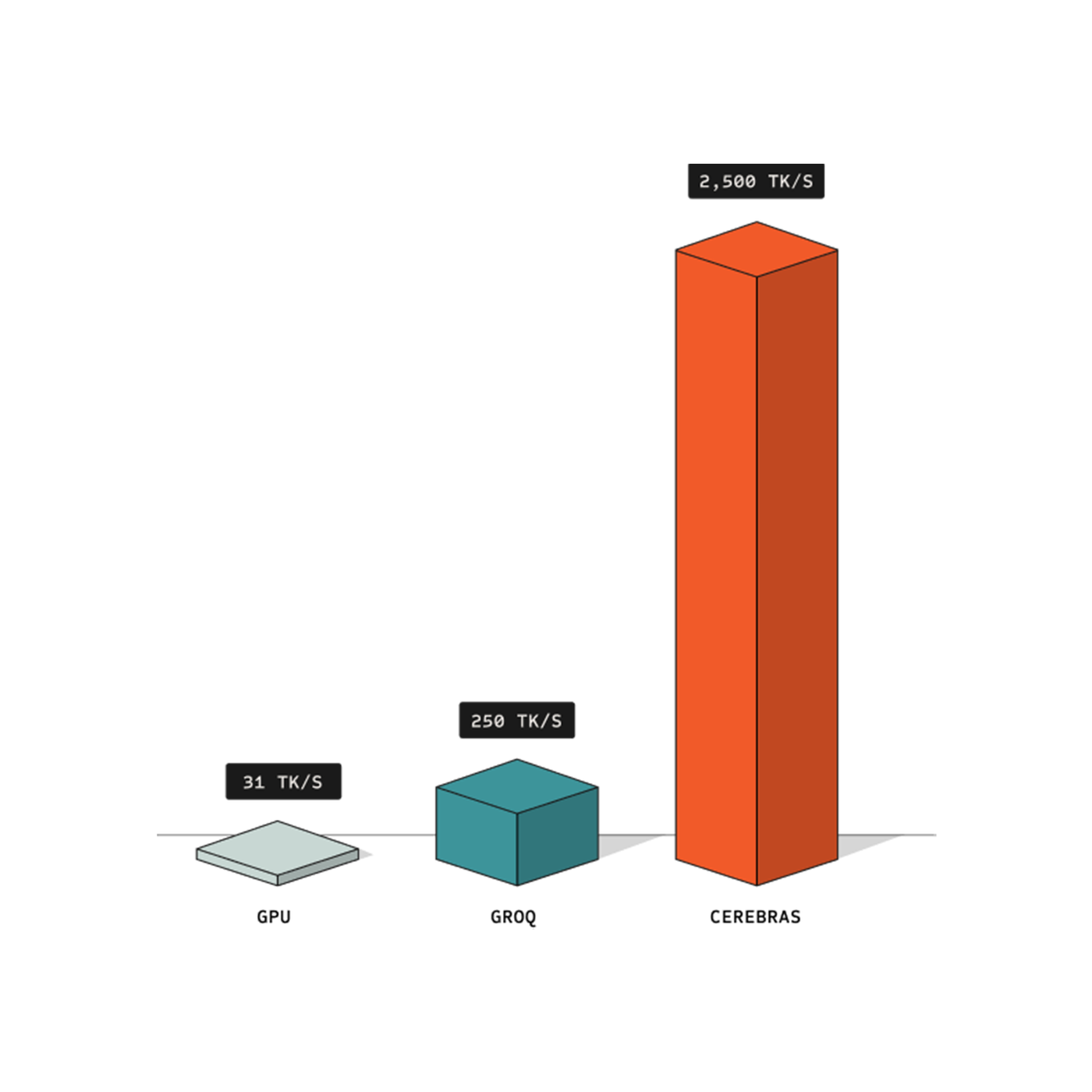

70X Faster than Leading GPUs

With processing speeds exceeding 2,500 tokens per second, Cerebras Inference eliminates lag, ensuring an instantaneous experience from request to response.
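To put the throughput figure in concrete terms, response time is roughly the number of generated tokens divided by the decode rate. The sketch below is illustrative only: it assumes a steady decode rate, ignores network latency and prompt processing, derives a GPU baseline simply by dividing 2,500 tokens/sec by the 70X figure, and uses a hypothetical 1,000-token response.

```python
def generation_seconds(num_tokens: int, tokens_per_second: float) -> float:
    """Time to generate num_tokens at a steady decode rate.

    Simplification: ignores network latency and prompt (prefill) processing.
    """
    if tokens_per_second <= 0:
        raise ValueError("tokens_per_second must be positive")
    return num_tokens / tokens_per_second


CEREBRAS_TPS = 2_500               # throughput figure quoted above
SPEEDUP = 70                       # the "70X faster" claim
GPU_TPS = CEREBRAS_TPS / SPEEDUP   # implied baseline (~35.7 tokens/s), illustrative only

response_tokens = 1_000            # hypothetical response length
fast = generation_seconds(response_tokens, CEREBRAS_TPS)
slow = generation_seconds(response_tokens, GPU_TPS)
print(f"{fast:.1f} s vs {slow:.1f} s")  # prints: 0.4 s vs 28.0 s
```

Under these assumptions, a response that would take about half a minute at the implied baseline arrives in well under a second.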

High Throughput, Low Cost

Built to scale effortlessly, Cerebras Inference handles heavy demand without compromising speed, reducing the cost per query and making enterprise-scale AI more accessible than ever.

Leading Open Models

Run Llama 3.1 8B, Llama 3.3 70B, Llama 4 Scout, Llama 4 Maverick, DeepSeek R1 70B Distilled, Qwen 3-32B, and Qwen 3-235B, with more models coming soon.

Real-Time Agents

Chain multiple reasoning steps instantly, letting your agents complete more tasks and run deeper workflows.

Instant Code-Gen

Generate full features, pages, or commits in a single shot, without waiting on slow token-by-token output.

Reasoning in under 1 second

No more waiting minutes for a full answer. Cerebras runs complete reasoning chains and returns the final answer in under a second.

Pricing & Plans

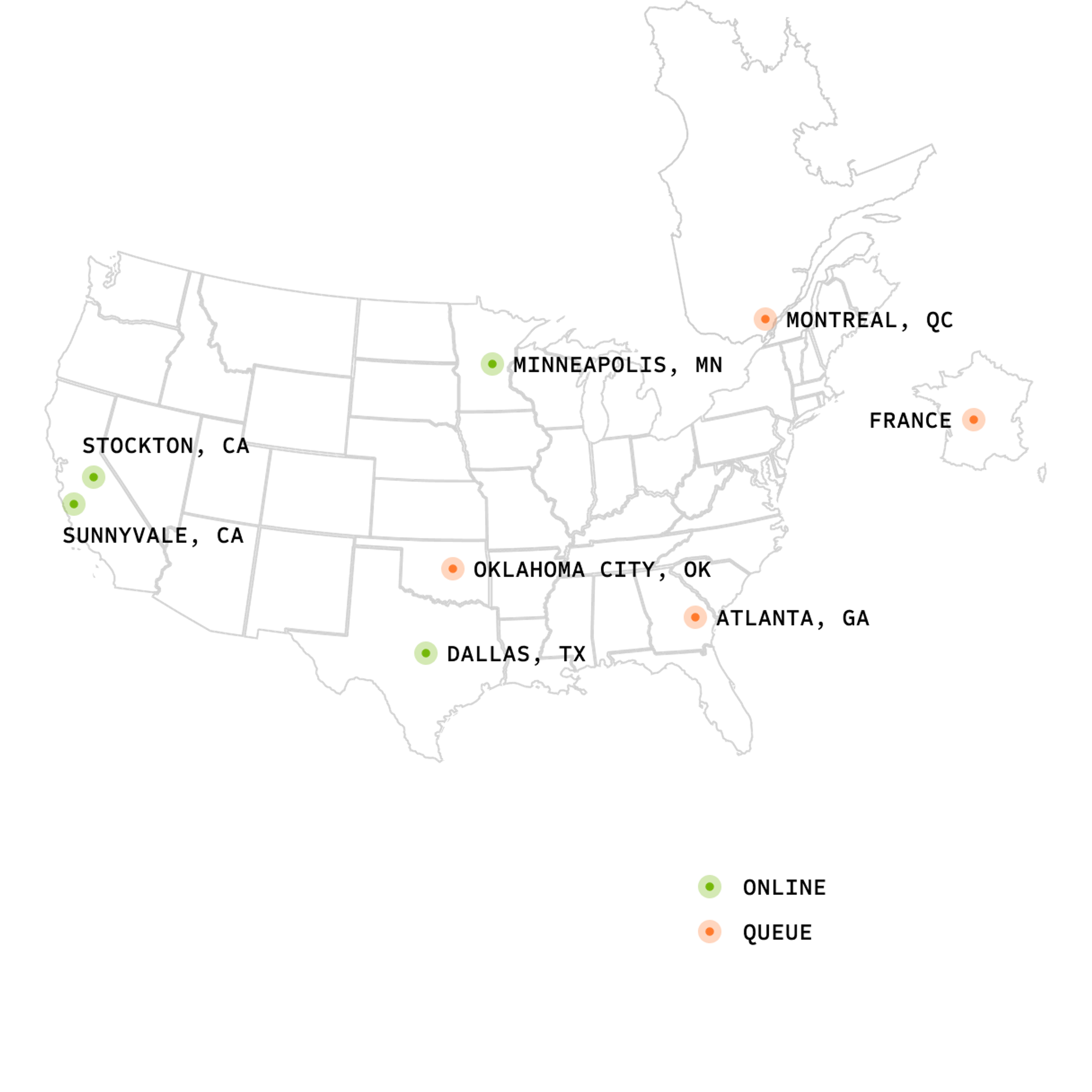

Cerebras Inference offers flexible, transparent pricing designed for everyone—from startups to global enterprises. Choose pay-as-you-go rates per million tokens or reserve dedicated capacity for your custom fine-tuned models, all powered by our data centers in North America.
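With pay-as-you-go pricing, the cost of a workload is the token count divided by one million, multiplied by the per-million rate. The sketch below assumes separate input and output rates, and the prices in it are hypothetical placeholders, not actual Cerebras prices; check the current pricing page for real rates.

```python
def request_cost(input_tokens: int, output_tokens: int,
                 input_price_per_m: float, output_price_per_m: float) -> float:
    """Dollar cost under per-million-token pay-as-you-go pricing.

    Assumes input and output tokens are billed at separate rates.
    """
    input_cost = (input_tokens / 1_000_000) * input_price_per_m
    output_cost = (output_tokens / 1_000_000) * output_price_per_m
    return input_cost + output_cost


# Hypothetical placeholder rates, NOT actual Cerebras prices.
INPUT_PRICE = 0.50   # $ per million input tokens (assumed)
OUTPUT_PRICE = 1.00  # $ per million output tokens (assumed)

# Hypothetical workload: 2M input tokens and 500K output tokens.
example_total = request_cost(2_000_000, 500_000, INPUT_PRICE, OUTPUT_PRICE)
print(f"${example_total:.2f}")  # prints: $1.50
```

Swapping in the published rates for a given model gives a quick estimate before committing to pay-as-you-go or reserved capacity.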

The fastest way to deploy Llama models: run Llama 3.3, Llama 4 Scout, and Llama 4 Maverick on Cerebras, available now.

AlphaSense, powered by Cerebras, delivers market intelligence with unprecedented speed and accuracy.

With Cerebras Inference, Tavus is building real-time, natural conversation flows for its digital clones.

Ultra Fast Inference at Scale

Cerebras is scaling AI infrastructure like never before with 6 new datacenters strategically located across the U.S. and Europe, bringing its total to 8 facilities. Powered by thousands of Cerebras CS-3 systems, these cutting-edge facilities will serve over 40 million tokens per second by the end of 2025, making Cerebras the world leader in high-speed inference.

"With Cerebras’ inference speed, GSK is developing innovative AI applications, such as intelligent research agents, that will fundamentally improve the productivity of our researchers and drug discovery process."

Kim Branson

SVP of AI and ML, GSK

"DeepLearning.AI has multiple agentic workflows that require prompting an LLM repeatedly to get a result. Cerebras has built an impressively fast inference capability which will be very helpful to such workloads."

Andrew Ng

Founder, DeepLearning.AI

"We’re excited to share the first models in the Llama 4 herd and partner with Cerebras to deliver the world’s fastest AI inference for them, which will enable people to build more personalized multimodal experiences. By delivering over 2,000 tokens per second for Scout – more than 30 times faster than closed models like ChatGPT or Anthropic, Cerebras is helping developers everywhere to move faster, go deeper, and build better than ever before."

Ahmad Al-Dahle

VP of GenAI at Meta

"For traditional search engines, we know that lower latencies drive higher user engagement and that instant results have changed the way people interact with search and with the internet. At Perplexity, we believe ultra-fast inference speeds like what Cerebras is demonstrating can have a similar unlock for user interaction with the future of search - intelligent answer engines."

Denis Yarats

CTO and co-founder, Perplexity